From Rossum’s Robots to Artificial Intelligence

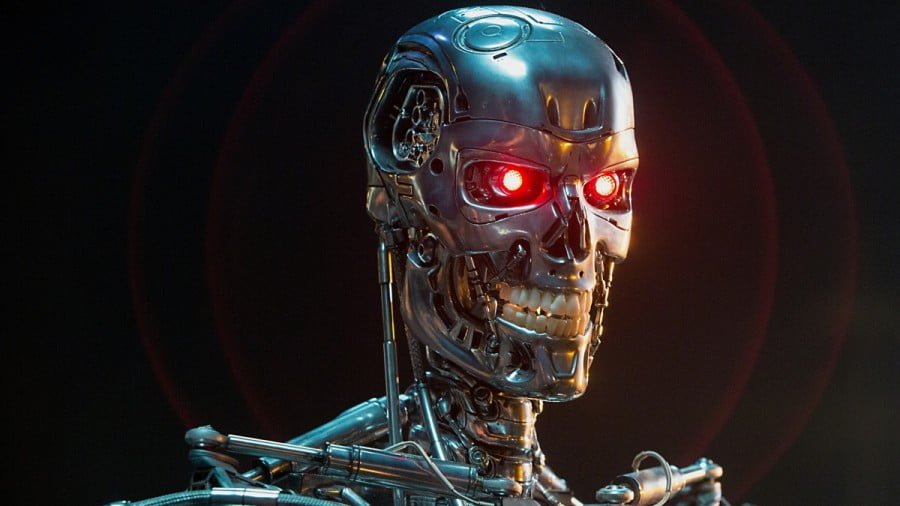

25 January 2021 marked one hundred years since the première of Czech sci-fi writer Karel Čapek’s play R.U.R. (Rossum’s Universal Robots). The short work anticipated later books on the subject, as well as cyberpunk and post-apocalyptic films such as The Terminator and Alien: Covenant. Rossum’s universal robots are conceived as human helpers, but in time they rebel and wipe out the human race, sparing only one factory worker, whom they need in order to recreate their own kind.

The word “robot” soon became commonplace and was applied to mechanisms with a limited set of programmable functions that required diagnostics, servicing and repair. More recently, however, and especially since the development of computers and cybertechnologies, people have begun to debate whether machines can think and make decisions on an equal footing with humans.

Nowhere are the latest achievements in robotics and computerisation more in demand than in the military, especially in the US, where special centres have been set up to develop dedicated programmes, applications and hardware. Numerous laboratories in the US Army, Marine Corps, Navy and Air Force, working with contractors and the country’s leading institutions, are taking prototypes of advanced systems through to completion. All of this technology is intended to serve the new wars that Washington is planning to unleash in the future.

Recent advances in this area are telling.

The unmanned Ghost Fleet Overlord vessel recently completed a 4,700-nautical-mile voyage and took part in the Dawn Blitz exercise, operating autonomously for almost the entire time.

Those concerned about China’s growing power propose using such systems in any future confrontation with the People’s Liberation Army (PLA), for example by deploying underwater suicide drones against Chinese submarines. US commentators already point to underwater and surface combat robots that the Chinese military is allegedly developing and which the Chinese call an “Underwater Great Wall”. That is why they propose either achieving parity with China or finding some way to outmanoeuvre it.

China’s efforts in this area show that possessing new types of weapons gives the US no guarantee that other countries will not put similar systems into service. For example, the spread of unmanned combat aerial vehicles to a number of countries has forced the US to develop methods and strategies for countering drones.

Accordingly, in January 2021, the US Defense Department published a strategy for countering small unmanned aircraft systems that expresses alarm at the changing nature of war and at growing competition, both of which challenge American superiority.

Lieutenant General Michael Groen, director of the US Defense Department’s Joint Artificial Intelligence Center, has spoken of the need to accelerate the adoption of artificial intelligence programmes for military use. According to Groen: “We may soon find ourselves in a battlespace defined by data-driven decision-making, integrated action, and tempo. With the right effort to implement AI today, we will find ourselves operating with unprecedented effectiveness and efficiency in the future.”

The Pentagon’s Joint Artificial Intelligence Center, which was set up in 2018, is now one of the leading military institutions developing “intelligent software” for future weapons, communications and command systems.

Artificial intelligence is now one of the most discussed topics in the US defence research community. It is a resource that can help achieve specific goals, such as enabling drones to fly without human supervision, gather intelligence, and identify targets through rapid and comprehensive analysis.

It is assumed that the development of artificial intelligence will lead to fierce competition, since AI differs from many past technologies in its natural tendency towards monopoly, a tendency that will exacerbate both domestic and international inequality. The professional services firm PricewaterhouseCoopers estimates that “nearly $16 trillion in GDP growth could accrue from AI by 2030”, with around 70% of that going to China and North America alone. If rivalry is the natural state of affairs, then companies developing artificial intelligence for military or dual-use purposes will find it entirely logical to view the field in competitive terms. The result will be a new kind of arms race.

On the ethical side, however, military artificial intelligence systems could be involved in decisions that save lives or, in effect, pass death sentences. They include both lethal autonomous weapons systems that can select and engage targets without human intervention and decision-support programmes. Some people actively advocate including machines in complex decision-making processes. The American roboticist Ronald Arkin, for example, argues that such systems often have greater situational awareness and, moreover, are not driven by self-preservation, fear, anger, revenge or misplaced loyalty; for this reason, he suggests, robots will be less likely than people to violate the laws of war.

Some also believe that artificial intelligence capabilities could transform the relationship between the tactical, operational and strategic levels of war. Autonomy and networking, together with other technologies such as nanotechnology, stealth and biogenetics, will offer sophisticated tactical combat capabilities on land, in the air and at sea. For example, swarms of autonomous undersea vehicles concentrated in specific areas of the ocean could make it harder for the submarines that currently guarantee nuclear powers a retaliatory strike to operate undetected. In this way, platforms that are tactical by design could have a strategic impact.

Enhanced manoeuvrability is also linked to artificial intelligence. This includes, among other things, software and sensors that allow robots to operate autonomously in dangerous environments, and it is one of the main reasons the military is turning to autonomous systems. The US military has high hopes for machine autonomy because it could give greater flexibility to the people who command and fight alongside robots. American developers expect to move from the current situation, in which 50 soldiers support a single drone, unmanned ground vehicle or aquatic robot, to one in which a single person supports 50 robots.

But artificial intelligence could also create serious problems. Military artificial intelligence could accelerate combat to the point where machines act faster than the decision-makers in the command posts of a future war can think and respond. Technology would then outpace strategy, and human and machine errors would most likely become intertwined, with unpredictable and unintended consequences.

A study by the RAND Corporation, which examines how thinking machines affect deterrence in a military confrontation, highlights the serious problems that could arise if artificial intelligence is used in the theatre of war. In the wargames conducted for the study, actions that both human players regarded as de-escalatory were immediately interpreted by the artificial intelligence as threatening. When a human player withdrew forces in order to de-escalate a situation, the machines were most likely to treat this as a tactical advantage to be consolidated. And when a human player moved forces forward as an obvious (but not hostile) show of determination, the machines tended to see this as an imminent threat and responded accordingly. The report found that players had to contend with uncertainty not only about what their adversary was thinking, but also about how the adversary’s artificial intelligence perceived the situation. Players also had to consider how their own artificial intelligence might misinterpret human intentions, both friendly and hostile.

In other words, the central idea of Karel Čapek’s play about robots remains relevant today: it is impossible to predict the behaviour of artificial intelligence. And if “intelligent” robots are put to military use, they could also become a danger to their owners. Even in the US, there are sceptics in the military, traditionalists who believe that such innovations from the utopians of Silicon Valley will harm American statecraft.