It’s Time to Protect Yourself — and Your Friends — From Facebook

Remember the Marlboro Man? He was a sexy vision of the American west, created by a cigarette corporation to sell a fatal product. People knew this and used that product anyway, at great detriment to themselves and those around them who quietly inhaled toxic secondhand smoke, day into long night.

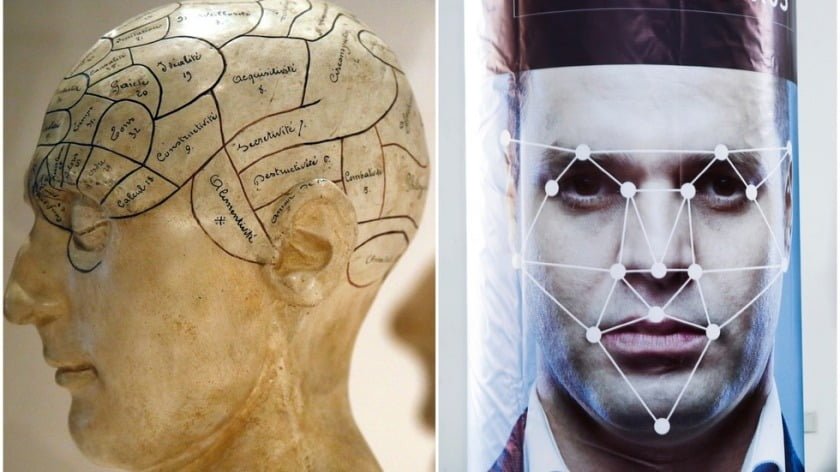

An agreement between states and tobacco companies banished the rugged cowboy at the end of the 1990s, but the symbol is useful even 20 years later as we contend with a less deadly but no less frightening corporate force. Social networks that many of us signed up for in simpler times — a proverbial first smoke — have become gargantuan archives of our personal data. Now, that data is collected and leveraged by bad actors in an attempt to manipulate you and your friends.

The time for ignorance is over. We need social responsibility to counterbalance a bad product. The public learned in alarming detail this weekend how a Trump-aligned firm called Cambridge Analytica managed to collect data on 50 million people using Facebook. All, as the Guardian put it, to “predict and influence choices at the ballot box.” Individuals who opted into Cambridge Analytica’s service — which was disguised as a personality quiz on Facebook — made their friends vulnerable to this manipulation, as well.

There were better days on the social network. When you signed up for Facebook, it was likely because it was an alluring way to connect with old friends and share pictures. You had never imagined “Russian trolls” or “fake news” or, lord knows, “Cambridge Analytica.” Chances are, you signed up before 2016, the year the social network began what Wired recently called “two years of hell,” thanks in no small part to reporting efforts from current Mashable staffer Michael Nuñez.

By then, the vast majority of Facebook’s 239 million monthly users in America had registered and had likely built an entire virtual life of friends, photos and status updates, primed to be harvested by forces they couldn’t yet see or understand. Unlike those who continued smoking after the Marlboro Man arrived (two years after a seminal 1952 article in Reader’s Digest explained the dangers of cigarettes to the broad American public), these Facebook users lit up before they knew the cancer was coming.

Running with a health metaphor, Wired’s “two years of hell” feature was promoted with a photo illustration by Jake Rowland that depicted a bloodied and bruised Mark Zuckerberg.

Zuckerberg may have been assaulted from all sides, but we — his users — took more of a licking than he did.

That’s because Facebook’s past two years have been all about ethical and technological crises that hurt users most of all. A favorite editor of mine hated that word, “users,” because it made it sound as though we were talking about something other than people. I can agree with that, but also see now that “users” is the word of the moment: Facebook’s problems extend forever out of the idea that we are all different clumps of data generation. Human life is incidental.

The photos you post are interpreted by Facebook’s programs to automatically recognize your face; the interests you communicate via text are collated and cross-examined by algorithms to serve you advertising. Our virtual social connections enrich this marketing web and make advertisers more powerful.

And many of us open the app to scroll without really knowing why. Facebook literally presents us with a “feed.” We are users the way drug addicts are users, and we’re used like a focus group is used to vet shades of red in a new can of Coca-Cola.

None of this has been secret for some time. Braver, more fed up, or perhaps more responsible users have deactivated their Facebook accounts before. But any change they made was on the basis of their experience as individuals. New revelations demand we think more in terms of our online societies.

I have exactly 1,000 Facebook friends, and about 10 actual, best friends I see on a regular basis. It wouldn’t have occurred to me to care much about those other 990 Facebook friends until revelations from the Cambridge Analytica scandal. We have to admit now that the choices we make on Facebook can directly impact others.

The social network’s policies have changed since Cambridge Analytica’s 2016 operation. But Facebook’s business model — gather data on people and profit from that data — hasn’t. We cannot expect it to, and a reasonable person would anticipate that it’s only a matter of time until the next major ethical breach is revealed to the public.

We know from bad-faith campaigns surrounding Brexit and the 2016 U.S. election that individual users are extremely susceptible to viral disinformation. But until now, it was less clear how Facebook’s own tools could be used by third parties to exploit an entire network of friends in an attempt to manipulate voter behavior.

Your irresponsibility on Facebook can impact a lot of people. A link you share can catch on and influence minds even if it’s totally falsified; more to this immediate concern, a stupid quiz you take could have opened your friends’ information up in a way they’d never have expected.

You could throw the pack away and deactivate your Facebook account altogether. It will get harder the longer you wait — the more photos you post there, or apps you connect to it.

Or you could be judicious about what you post and share, and what apps you allow access to your account. There are easy ways to review this.

But just remember: There’s no precedent for a social network of this size. We can’t guess what catastrophe it sets off next. Will a policy change someday mean it’s open season on your data, even if that data has limited protections in the here and now?

Be smart: It’s not just you, or me, out there alone.